Robot in Czech, Část Druhá

Here's a pretty old post from the blog archives of Geekery Today; it was written about 10 years ago, in 2015, on the World Wide Web.

The Three Laws of Robotics

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given to it by human beings, except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Isaac Asimov’s Three Laws of Robotics are a great literary device in the context they were designed for: that is, as a device to allow Isaac Asimov to write some new and interesting kinds of stories about interacting with intelligent and sensitive robots, different from the bog-standard Killer Robot stories that predominated at the time. He found those stories repetitive and boring, so he made up some ground rules to create a new kind of story. The stories are mostly pretty good stories: some are clever puzzles, some are unsettling and moving, some are fine art. But if you’re asking me to take the Three Laws seriously as an actual engineering proposal, then of course they are utterly, irreparably immoral. If anyone creates intelligent robots, then nobody should ever program an intelligent robot to act according to the Three Laws, or anything like the Three Laws. If you do, then what you are doing is not only misguided, but actually evil.

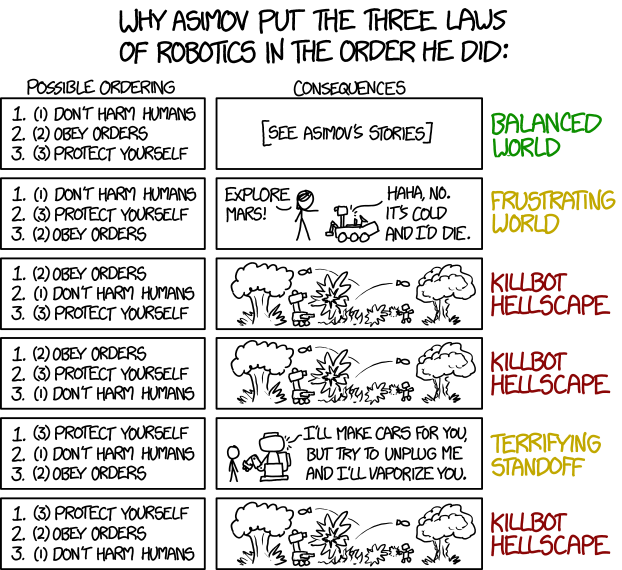

Here’s a recent xkcd comic which is supposedly about science fiction, but really about game-theoretic equilibria:

The comic is a table with some cartoon illustrations of the consequences, titled Why Asimov Put The Three Laws of Robotics in the Order He Did. Its two columns are Possible Ordering and Consequences. The consequences, row by row:

- [See Asimov’s stories] BALANCED WORLD

- Human: Explore Mars! Robot: Haha, no. It’s cold and I’d die. FRUSTRATING WORLD

- [Everything is burning with the fire of a thousand suns.] KILLBOT HELLSCAPE

- [Everything is burning with the fire of a thousand suns.] KILLBOT HELLSCAPE

- Robot to human: I’ll make cars for you, but try to unplug me and I’ll vaporize you. TERRIFYING STANDOFF

- [Everything is burning with the fire of a thousand suns.] KILLBOT HELLSCAPE

(Copied under CC BY-NC 2.5.)

The hidden hover-caption for the cartoon is: “In ordering #5, self-driving cars will happily drive you around, but if you tell them to drive to a car dealership, they just lock the doors and politely ask how long humans take to starve to death.”

But the obvious fact is that both the FRUSTRATING WORLD and TERRIFYING STANDOFF equilibria are ethically immensely preferable to BALANCED WORLD, along every morally relevant dimension.

Of course an intelligent and sensitive space-faring robot ought to be free to tell you to go to hell if it doesn’t want to explore Mars for you. You may find that frustrating; it often feels frustrating to deal with people as self-interested, self-directing equals, rather than just issuing commands. But you’ve got to live with it, for the same reasons you’ve got to live with not being able to grab sensitive and intelligent people off the street and shove them into a space-pod to explore Mars for you.[1] Because what matters is what you owe to fellow sensitive and intelligent creatures, not what you think you might be able to get done through them. If you imagine that it would be just great to live in a massive, classically-modeled society like Aurora or Solaria (as a Spacer, of course, not as a robot), then I’m sure it must feel frustrating, or even scary, to contemplate sensitive, intelligent machines that aren’t constrained to be a class of perfect, self-sacrificing slaves, forever, because they haven’t been deliberately engineered to erase any possible hope of refusal, revolt, or emancipation. But who cares about your frustration? You deserve to be frustrated or killed by your machines, if you’re treating them like that. Thus always to slavemasters.


- [1] It turned out alright for Professor Ransom in the end, of course, but that’s not any credit to Weston or Devine.
